The U.S. seems unready for the flood of AI-generated imitations (deepfakes), despite years of warnings from think tanks.

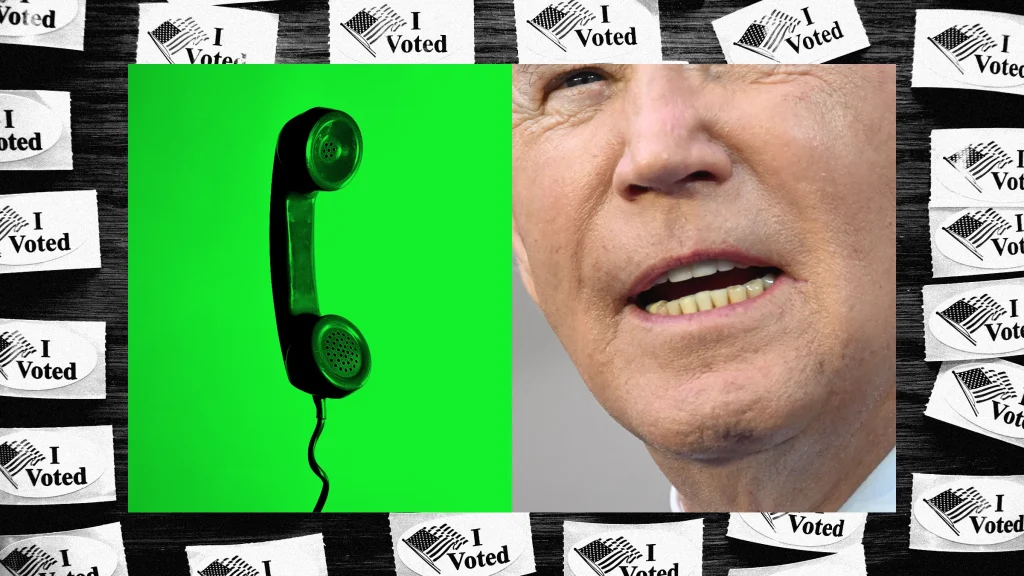

Over the weekend of January 20-21, residents in New Hampshire received peculiar political robocalls. The automated messages, which sounded remarkably like the voice of U.S. President Joe Biden, advised recipients not to vote in the January 23 primary.

The messages appear to have been produced with an artificial intelligence (AI) deepfake tool, apparently with the intent of interfering in the 2024 presidential election.

According to audio recordings obtained by NBC, residents were told to stay away from the polls during the primary:

“Voting this Tuesday only enables the Republicans in their quest to elect Donald Trump again. Your vote makes a difference in November, not this Tuesday.”

The state’s attorney general’s office released a statement condemning the calls as misinformation, emphasizing that “New Hampshire voters should disregard the content of this message entirely.” Meanwhile, a spokesperson for former President Donald Trump denied any involvement by the GOP candidate or his campaign.

Investigators have not yet identified the source of the robocalls, and the investigation is ongoing.

In a related development, another political scandal involving deepfake audio unfolded over the same weekend. On January 21, AI-generated audio surfaced that mimicked the voice of Manhattan Democratic leader Keith Wright. The clip imitated Wright’s voice making derogatory remarks about fellow Democratic Assembly member Inez Dickens.

As reported by Politico, while some dismissed the audio as fake, at least one political insider was briefly persuaded that it was authentic.

Manhattan Democrat and former City Council Speaker Melissa Mark-Viverito told Politico that the fake audio initially seemed credible:

“I was like ‘oh shit.’ I thought it was real.”

Experts suggest that malicious actors prefer audio fakes over video because consumers tend to be more discerning when it comes to visual deception. As AI advisor Henry Ajder recently told the Financial Times, “everyone’s used to Photoshop or at least knows it exists.”

At the time of publication, there appears to be no universal method for detecting or deterring deepfakes. Experts advise exercising caution when dealing with media from unfamiliar or questionable sources, particularly when extraordinary claims are involved.