An Adobe-backed association aims to help organizations in India by establishing an AI standard.

India, which has a long history of using technology to shape public opinion, now stands at the forefront of a global conversation about the ethical use of AI in politics and democracy. Against this backdrop, tech giants, the very architects of these technologies, are stepping in to offer remedies.

In a significant move earlier this year, Andy Parsons, an influential figure at Adobe who spearheads the company’s involvement in the Content Authenticity Initiative, visited India. His journey wasn’t just a business trip but a mission to connect with local media and technology communities. He aimed to introduce and advocate for the adoption of innovative tools that help discern and signal AI-generated content, embedding a layer of trust and transparency in digital communication.

“Instead of detecting what’s fake or manipulated, we as a society, and this is an international concern, should start to declare authenticity, meaning saying if something is generated by AI that should be known to consumers,” Parsons stated in an interview. He highlighted a shift in focus towards confirming authenticity, especially in revealing AI-generated content to users.

He further mentioned that while some Indian companies are not yet part of the Munich AI election safety accord, which includes OpenAI, Adobe, Google, and Amazon, there is an interest in forming a similar alliance in India. “Legislation is a very tricky thing. To assume that the government will legislate correctly and rapidly enough in any jurisdiction is something hard to rely on. It’s better for the government to take a very steady approach and take its time,” Parsons added, expressing skepticism about relying solely on government legislation and advocating for a measured approach.

Detection tools have their limitations and inconsistencies, yet they’re considered a foundational step towards addressing some of the challenges in digital content verification.

Parsons elaborated on this during his visit to Delhi. “The concept is already well understood,” he said. “My role is to boost awareness that these tools aren’t just theoretical. They’re not mere concepts. They’ve been developed and are already in use.”

The Content Authenticity Initiative (CAI), advocating for royalty-free, open standards to discern whether digital content is machine-generated or human-made, was established before the surge in generative AI popularity. Initiated in 2019, it now boasts 2,500 members, encompassing tech giants like Microsoft, Meta, and Google, as well as leading media outlets such as The New York Times, The Wall Street Journal, and the BBC.

Parallel to the burgeoning industry that utilizes AI to produce media, a smaller, focused sector is emerging. This sector aims to address and mitigate some of the potentially harmful uses of AI in content creation.

In February 2021, Adobe escalated its commitment to authenticity by co-founding the Coalition for Content Provenance and Authenticity (C2PA) alongside ARM, BBC, Intel, Microsoft, and Truepic. This coalition is dedicated to creating an open standard that utilizes metadata from various media types to trace their origins, detailing the creation time, location, and any alterations. The CAI collaborates with the C2PA to promote this standard broadly.

Currently, the coalition is proactively working with governments, including India’s, to expand the standard’s adoption. This effort aims to clarify the origins of AI-generated content and to assist in formulating guidelines for the responsible progression of AI technology.

Adobe, a dominant force in the creative tools sector with products like Photoshop and Lightroom, is venturing into AI without creating its own large language models (LLMs). While introducing AI features into new and existing products, including the AI content creator Firefly, Adobe is at a crossroads. Its future success hinges on integrating AI to maintain industry leadership while ensuring its innovations don’t contribute to misinformation.

In India, the situation is complex.

Google is testing restrictions on election content in its AI tool Gemini, political parties are using AI to generate misleading content, and Meta has launched a deepfake helpline on WhatsApp, reflecting the platform’s role in spreading AI-generated content. Meanwhile, India’s government has eased AI regulations to boost innovation, a move that could significantly influence the AI landscape at a time of growing global concern about AI safety.

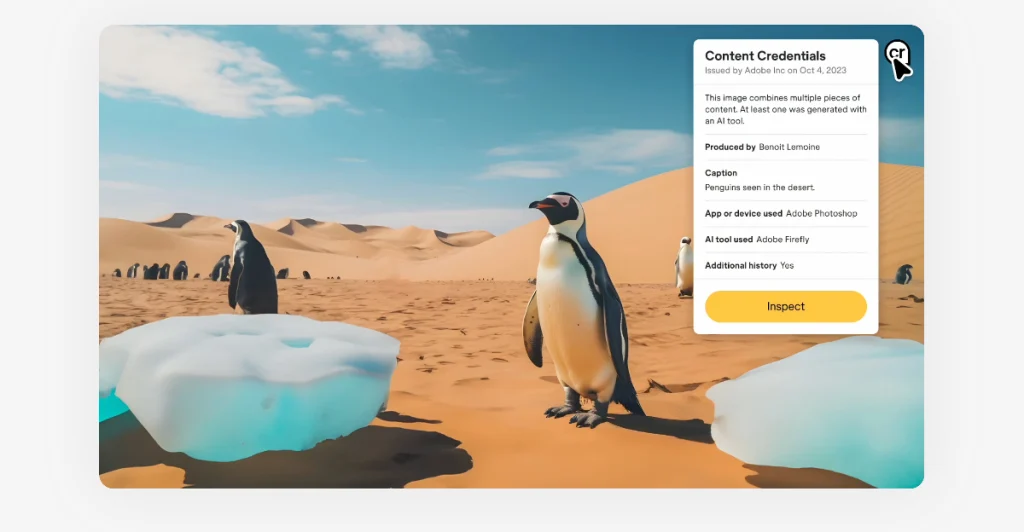

The C2PA has introduced a “digital nutrition label” for content, known as Content Credentials, leveraging its open standard. This label aims to inform users about the origin of content and whether it’s AI-generated. Adobe has integrated Content Credentials into its creative suite, including Photoshop and Lightroom, and this feature is also automatically applied to content produced by Adobe’s AI model, Firefly. Additionally, Leica has embedded Content Credentials in its new camera, and Microsoft has incorporated this feature into all AI-generated images from Bing Image Creator, enhancing transparency for users.

Parsons said the CAI is in discussions with governments worldwide, not only to promote its standard as a global norm but also to encourage its adoption. He stressed the significance of this, particularly in an election context, noting, “In an election year, it’s critical that when material is released from offices like that of PM Modi, its authenticity is verifiable. There have been instances where this was not the case, so ensuring that the public, fact-checkers, and digital platforms can trust the authenticity of such communications is paramount.”

Parsons acknowledged the unique challenges India faces in combating misinformation due to its large population and diverse languages and demographics, advocating for straightforward labeling as a solution.

He explained, “That’s a little ‘CR’… just two letters, like in many Adobe tools, signaling that there’s additional context available.”

The discussion then turned to the motives behind tech companies’ support for AI safety measures. Is their involvement driven by genuine concern, or is it a strategy to influence policymaking while appearing concerned?

Addressing this skepticism, Parsons pointed out, “There’s little controversy among the involved companies. Those who joined the Munich accord, including Adobe, set aside competition, uniting over a cause we all recognize as crucial.”