Mistral AI announced a collaboration with Microsoft, enabling access to Mistral Large through Azure AI Studio and Azure Machine Learning.

The France-based startup Mistral AI is making waves in the AI industry with the introduction of its new large language model (LLM), Mistral Large, aiming to compete with leading names in the field.

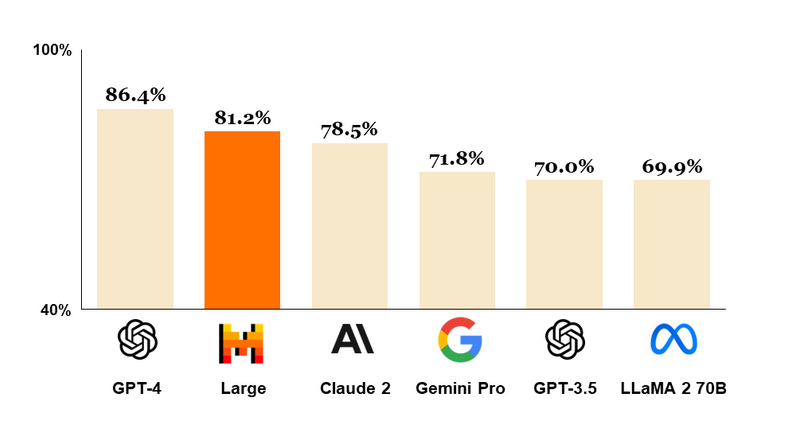

In an announcement on February 26, the Paris-headquartered company said that Mistral Large surpassed several renowned LLMs in a “multitask language understanding” evaluation, falling short only of GPT-4. The model also showed impressive capabilities in various math and coding challenges.

Despite these achievements, Mistral Large has not been directly compared with some of the latest models like xAI’s Grok and Google’s Gemini Ultra, which were unveiled in November of the previous year and early February, respectively. Cointelegraph has contacted Mistral AI for further details.

Guillaume Lample, co-founder and chief scientist at Mistral AI, said that Mistral Large significantly outperforms the company’s previous models. Mistral AI also introduced “Le Chat,” an AI-powered chat interface built on top of its models, much as ChatGPT is built on GPT-3.5 and GPT-4.

Mistral AI secured $487 million in funding this past December from prominent investors such as Nvidia, Salesforce, and Andreessen Horowitz. The company says that Mistral Large has a vocabulary exceeding 20,000 English words and that the model is proficient in multiple languages, including French, Spanish, German, and Italian.

Mistral AI released its first model under an open-source license, but its latest offering, Mistral Large, takes a different path. It is a closed, proprietary model, in line with the recent LLMs launched by OpenAI. This shift has led to some disappointment among enthusiasts on the social platform X.

Mistral AI has also made its mark in the competitive landscape of AI chatbots. While its new model, Mistral Large, has yet to be ranked, its predecessor, Mistral Medium, secured the sixth spot in the rankings of Chatbot Arena, a platform that assesses over sixty LLMs.

Chatbot Arena builds its rankings with the Bradley-Terry model, which uses thousands of pairwise ratings and random sampling to calculate an “Elo” rating. This rating predicts the likelihood of one model winning over another in direct competition.
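To illustrate how an Elo-style rating translates into a win probability, here is a minimal Python sketch of the conventional Elo logistic formula. The base-10 curve and the 400-point scale are the standard chess-style Elo convention and are assumed here; the article does not specify the exact constants Chatbot Arena uses, and the ratings below are hypothetical values chosen only for illustration.

```python
def elo_win_probability(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Estimated probability that model A beats model B.

    Uses the conventional Elo logistic curve (base 10, 400-point scale).
    These constants are an assumption, not confirmed Chatbot Arena settings.
    """
    return 1.0 / (1.0 + 10.0 ** ((rating_b - rating_a) / scale))


# Hypothetical ratings for illustration only, not actual Chatbot Arena scores.
model_a_rating = 1150.0
model_b_rating = 1100.0

p_a_wins = elo_win_probability(model_a_rating, model_b_rating)
print(f"Estimated chance that model A wins: {p_a_wins:.1%}")
```

Under this convention, two models with equal ratings each have a 50% chance of winning, and a 400-point gap corresponds to roughly ten-to-one odds in favor of the higher-rated model.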

Adding to its advancements, Mistral AI recently entered into a strategic partnership with Microsoft. This collaboration aims to broaden the accessibility of Mistral Large, making it available on Azure AI Studio and Azure Machine Learning platforms.

Mistral AI described the collaboration with Microsoft as a significant milestone. “Microsoft’s trust in our model is a step forward in our journey,” the company said, underscoring the importance of the partnership for Mistral Large, its commercially focused LLM.

This collaboration will leverage Azure’s advanced “supercomputing infrastructure” for both training Mistral Large and enhancing its capabilities. Additionally, Mistral AI and Microsoft have committed to jointly working on AI research and development. Eric Boyd, the corporate vice president of Microsoft’s Azure AI Platform, confirmed this cooperative effort in a statement on February 26.

Mistral Large is priced at $8 per million input tokens and $24 per million output tokens. That makes it about 20% cheaper than GPT-4 Turbo, which costs $10 per million input tokens and $30 per million output tokens.
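As a rough illustration of what these prices mean in practice, the following Python sketch computes the cost of a workload under both price lists. The per-million-token prices come from the figures above; the dictionary keys are hypothetical labels rather than official API model identifiers, and the workload size is an arbitrary example.

```python
# Prices in US dollars per one million tokens, as quoted in the article.
# The keys are illustrative labels, not official API model identifiers.
PRICES = {
    "mistral-large": {"input": 8.0, "output": 24.0},
    "gpt-4-turbo": {"input": 10.0, "output": 30.0},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in US dollars for the given token counts."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical workload: 2 million input tokens and 500,000 output tokens.
mistral_cost = request_cost("mistral-large", 2_000_000, 500_000)
gpt4_cost = request_cost("gpt-4-turbo", 2_000_000, 500_000)

print(f"Mistral Large: ${mistral_cost:.2f}")
print(f"GPT-4 Turbo:   ${gpt4_cost:.2f}")
print(f"Savings:       {1 - mistral_cost / gpt4_cost:.0%}")
```

Because both the input and output prices are 80% of GPT-4 Turbo's, the relative saving is the same regardless of the mix of input and output tokens.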

Bloomberg reported last December that Mistral’s valuation was close to $2 billion.