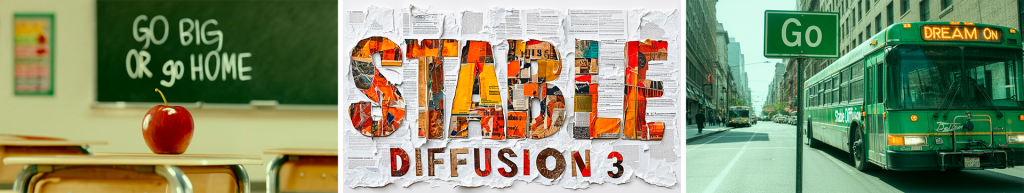

London-based AI company Stability AI has offered an early preview of Stable Diffusion 3, its latest text-to-image model. The generative model produces images from text descriptions, and Stability AI says it improves on earlier versions in several key areas: handling complex prompts with multiple subjects, overall image quality, and spelling.

The announcement came shortly after Stability AI's main competitor, OpenAI, introduced Sora, a new AI model that can generate nearly lifelike, high-definition videos from simple text descriptions.

Sora has not yet been released to the public, and its ability to produce realistic fake videos has raised concerns. OpenAI has said it will work with experts in misinformation and hate speech to evaluate the tool before making it broadly available.

Stable Diffusion 3's standout improvement is its much better handling of complex prompts that describe multiple subjects. Users can write more detailed descriptions containing several elements and still get good results.

Beyond handling complex prompts more effectively, the new model also offers better overall image quality and more accurate spelling of text within images. Stability AI says these improvements address some of the consistency and coherence problems that affected earlier generations of text-to-image models.

Stable Diffusion 3 is not yet publicly available, but Stability AI has opened a waitlist for early access. This preview phase is meant to gather user feedback for refining the model ahead of its full release, planned for later this year.

As OpenAI is doing with Sora, Stability AI is working with experts to evaluate Stable Diffusion 3 and minimize potential harms.

Stability AI emphasizes its commitment to the ethical development and deployment of AI technologies. “We are dedicated to upholding safe and responsible AI practices. Our approach includes proactive measures to deter misuse of Stable Diffusion 3 by malicious entities. The foundation of safety is embedded in our model development process, extending through testing, assessment, and deployment,” the company stated.

Ahead of the early preview, Stability AI says it has put several safeguards in place. By working closely with the research community, industry experts, and users, the company aims to encourage innovation while upholding ethical standards, and it expects this collaboration to guide the model toward a successful and responsible public release.

Stable Diffusion 3 comes in a range of model sizes, from 800 million to 8 billion parameters. The range is meant to balance creative capability against the computing resources available to different users.
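As a rough illustration of why parameter count matters for hardware, the sketch below estimates the memory needed just to store the weights at the two ends of that range. It assumes every weight is stored at one uniform precision and ignores activations, text encoders, and other pipeline components, so real requirements will be higher. The function and table names are hypothetical.

```python
# Rough estimate of the memory needed only to hold a model's weights.
# Assumption: all weights use one precision; activations, text encoders,
# and other pipeline components are NOT counted.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: int, precision: str = "fp16") -> float:
    """Approximate weight memory in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Smallest and largest sizes mentioned for Stable Diffusion 3.
for params in (800_000_000, 8_000_000_000):
    for precision in ("fp32", "fp16"):
        print(f"{params / 1e9:.1f}B params @ {precision}: "
              f"{weight_memory_gb(params, precision):.1f} GB")
```

By this estimate, the 800-million-parameter model's weights need about 1.6 GB at 16-bit precision, while the 8-billion-parameter version needs about 16 GB, which helps explain why smaller sizes matter for users with less powerful hardware.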

Stability AI also reiterated its commitment to open generative AI. “Our dedication to ensuring that generative AI remains open, safe, and universally accessible is unwavering,” the company said.

By offering Stable Diffusion 3 in a range of sizes, Stability AI aims to provide flexible options for individuals, developers, and businesses alike. The company presents this as part of its broader mission to unlock human potential through innovation and help users fully express their creativity.