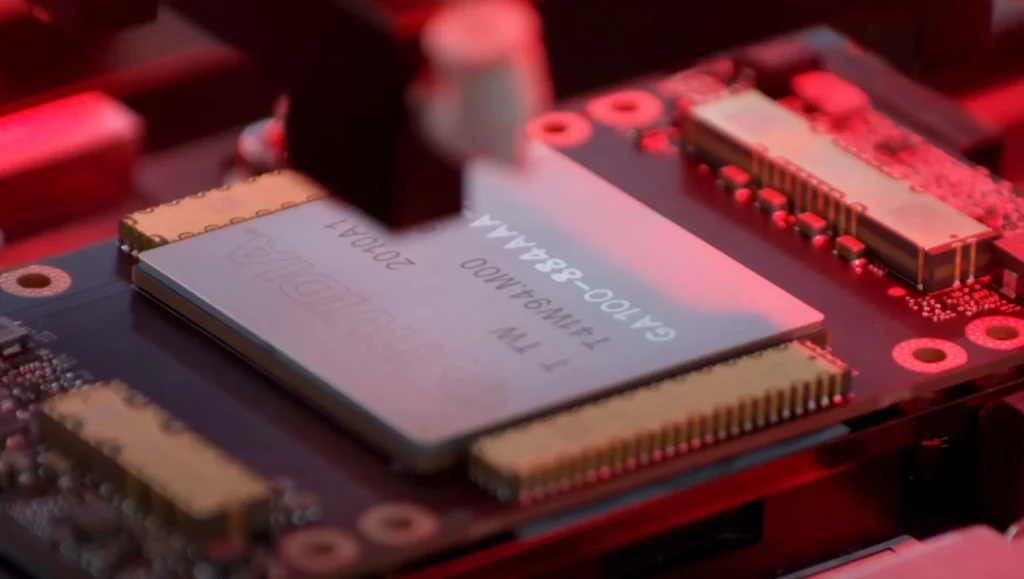

As AI projects boom and the physical limits of silicon become apparent, some startups are challenging Nvidia’s dominance and arguing that it’s time to reinvent the computer chip from scratch.

The cost of further progress in artificial intelligence is becoming as startling as a ChatGPT hallucination. The graphics chips known as GPUs, which are needed to train large AI models, have become extremely expensive. OpenAI has said that training the algorithm behind ChatGPT cost the company more than $100 million. The race to compete in AI also means that data centers are consuming worrying amounts of energy.

The AI gold rush has spurred some startups to hatch ambitious plans for new tools of the trade. Nvidia’s GPUs dominate AI hardware today, but these newcomers argue that computer chip design needs a fundamental overhaul.

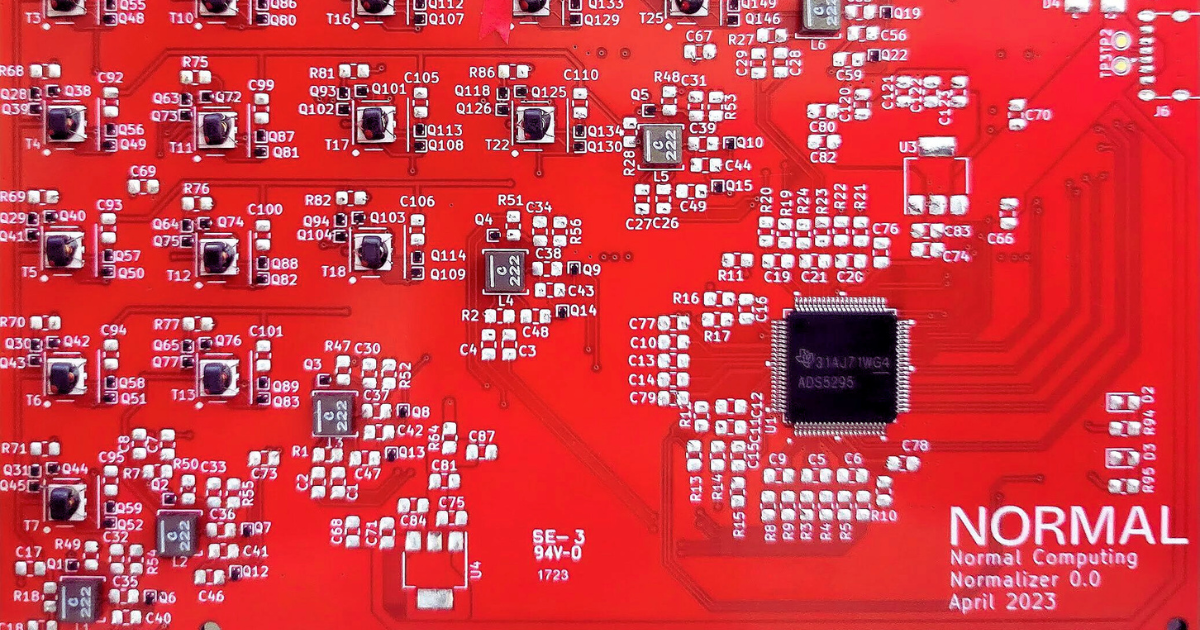

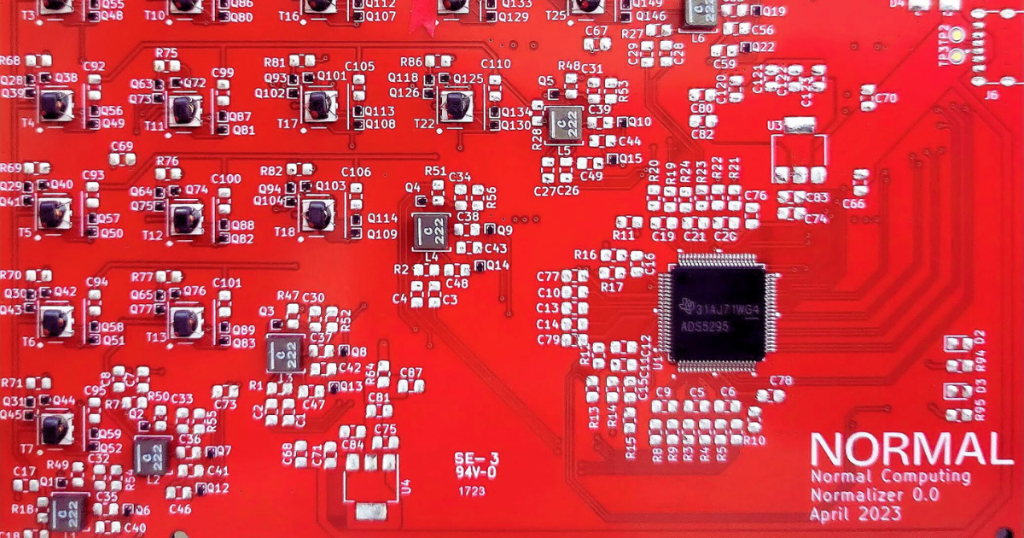

Normal Computing, a startup founded by former members of Google Brain and Alphabet’s moonshot lab X, has built a simple prototype that is a first step toward reinventing computing from first principles.

A conventional silicon chip computes by manipulating binary bits, the 0s and 1s that represent information. Normal Computing’s stochastic processing unit, or SPU, instead exploits the thermodynamic properties of electrical oscillators, performing calculations with the random fluctuations that occur inside its circuits. This approach can generate random samples that are useful for computation, or solve linear algebra problems, which are ubiquitous in science, engineering, and machine learning.
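The article does not describe the SPU’s circuits, but a general principle shows how random fluctuations can solve a linear algebra problem. A noisy system relaxing in the quadratic energy landscape E(x) = ½ xᵀAx - bᵀx, with A symmetric positive-definite, settles into a Gaussian distribution whose mean is the solution of Ax = b, so averaging the system’s state over time recovers that solution. The minimal Python sketch below simulates such a system digitally with overdamped Langevin dynamics. It is an illustration under those assumptions, not Normal Computing’s design; the function `langevin_solve` and its parameters are hypothetical.

```python
import math
import random


def langevin_solve(A, b, dt=0.01, steps=200_000, burn_in=10_000,
                   temperature=1.0, seed=0):
    """Hypothetical helper: estimate x = A^-1 b for a symmetric
    positive-definite matrix A by time-averaging a noisy relaxation.

    The process dx = -(A x - b) dt + sqrt(2 T) dW has a Gaussian stationary
    distribution with mean A^-1 b and covariance T * A^-1.
    """
    rng = random.Random(seed)
    n = len(b)
    x = [0.0] * n
    total = [0.0] * n
    noise_scale = math.sqrt(2.0 * temperature * dt)
    for step in range(steps):
        # Gradient of the energy 0.5 x^T A x - b^T x; the drift follows it downhill.
        grad = [sum(A[i][j] * x[j] for j in range(n)) - b[i] for i in range(n)]
        # Euler-Maruyama step: deterministic drift plus "thermal" Gaussian noise.
        x = [x[i] - dt * grad[i] + noise_scale * rng.gauss(0.0, 1.0)
             for i in range(n)]
        # Discard the initial transient, then accumulate samples for the mean.
        if step >= burn_in:
            for i in range(n):
                total[i] += x[i]
    samples = steps - burn_in
    return [t / samples for t in total]


A = [[4.0, 1.0], [1.0, 3.0]]
b = [1.0, 2.0]
estimate = langevin_solve(A, b)
# The exact solution is [1/11, 7/11], about [0.091, 0.636].
print(estimate)
```

Here every noisy step costs ordinary digital arithmetic; the appeal of thermodynamic hardware is that a physical circuit would supply both the relaxation and the noise natively.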

Faris Sbahi, the CEO of Normal Computing, explains that the hardware is not only highly efficient but also well suited to statistical calculations. That could make it valuable for building AI algorithms that can handle uncertainty, perhaps curbing the tendency of large language models to produce inaccurate outputs when they are unsure.

Sbahi says that the recent achievements of generative AI, while impressive, are only an intermediate stage in the technology’s evolution. “It’s evident that there’s something superior in terms of both software architectures and hardware,” he says. Sbahi and his cofounders previously worked on quantum computing and AI at Alphabet, and they changed course when progress in using quantum computers for machine learning proved slow. That led them to look for other ways of harnessing physics to perform the computations AI requires.

Another group of former quantum researchers at Alphabet left to found Extropic, a company still in stealth mode, with what appears to be an even more ambitious plan to use thermodynamic computing for AI.

Guillaume Verdon, founder and CEO of Extropic, explains, “We’re trying to do all of neural computing tightly integrated in an analog thermodynamic chip. We are taking our learnings from quantum computing software and hardware and bringing it to the full-stack thermodynamic paradigm.”

Verdon, recently revealed as the person behind the popular X meme account Beff Jezos, is associated with the effective accelerationism movement, which promotes the idea of progress toward a “technocapital singularity.”

Interest in rethinking how computers work is growing partly because of the challenges facing Moore’s Law, the long-standing prediction that the number of components packed onto a chip will keep increasing as those components keep shrinking. Even if Moore’s Law were not slowing down, there would still be a big problem: the models released by companies like OpenAI are growing much faster than chip capacity. Keeping up with the demands of AI may require entirely new ways of computing.

Companies like Normal, Extropic, and Vaire Computing are exploring new ways of building computer chips, suggesting that GPUs may soon face competition. Vaire Computing, a UK startup, is developing silicon chips that work in a fundamentally different way in order to perform calculations more energy-efficiently. This approach, called reversible computing, was proposed decades ago but never became widespread. Rodolfo Rosini, Vaire’s CEO, believes that conventional chips, GPUs included, may be nearing their limits because it becomes harder to manage heat as components shrink, which points to the need for new approaches to computing.
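Reversible computing, in general terms, builds logic from operations that can be run backwards, so no information is erased along the way. Landauer’s principle holds that erasing one bit dissipates at least kT ln 2 of energy as heat, which is why reversibility is tied to efficiency. The article does not describe Vaire’s chip design, so the Python sketch below uses a textbook example instead: the Toffoli gate, a reversible logic gate that can compute AND without discarding its inputs. The helper `reversible_and` is a hypothetical name chosen for illustration.

```python
from itertools import product


def toffoli(a: int, b: int, c: int) -> tuple[int, int, int]:
    """Toffoli (controlled-controlled-NOT) gate on three bits."""
    # The control bits a and b pass through unchanged; the target c is
    # flipped only when both controls are 1.
    return a, b, c ^ (a & b)


def reversible_and(a: int, b: int) -> tuple[int, int, int]:
    """Hypothetical helper: write a AND b into a fresh target bit set to 0,
    keeping both inputs so the operation can be undone."""
    return toffoli(a, b, 0)


states = list(product((0, 1), repeat=3))
outputs = [toffoli(*s) for s in states]

# A reversible gate is one-to-one: 8 distinct inputs give 8 distinct outputs.
print(len(set(outputs)))  # 8
# Applying the gate twice restores every input, so it is its own inverse.
print(all(toffoli(*toffoli(*s)) == s for s in states))  # True
# Ordinary AND maps 4 possible inputs onto only 2 outputs, losing information.
print(sorted({a & b for a, b in product((0, 1), repeat=2)}))  # [0, 1]
```

The price of reversibility is that the extra bits kept alongside each result must eventually be stored or uncomputed rather than simply thrown away.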

Persuading a long-established industry to abandon a technology it has relied on for more than 50 years is a formidable challenge. But for the company that introduces the next hardware platform, the rewards could be immense. Andrew Scott of 7percent Ventures, which backs Vaire, likens its potential to that of transformative technologies such as the jet engine, the transistor microchip, or quantum computing. Investors backing Extropic and Normal hold similar hopes for those companies’ reinventions of computing.

Even more unconventional ideas, such as abandoning electricity inside computer hardware altogether, are gaining attention. Peter McMahon’s research lab, for example, is exploring the use of light to process information as a way to save energy. At a conference McMahon organized in Aspen, Colorado, a team of researchers from the Netherlands presented an intriguing concept: a mechanical cochlear implant that uses sound waves to power its computations. Such ideas point to a broader search for energy-efficient ways to compute.

It’s tempting to dismiss chatbots, but the excitement generated by ChatGPT may be paving the way for transformative changes that reach well beyond AI software.