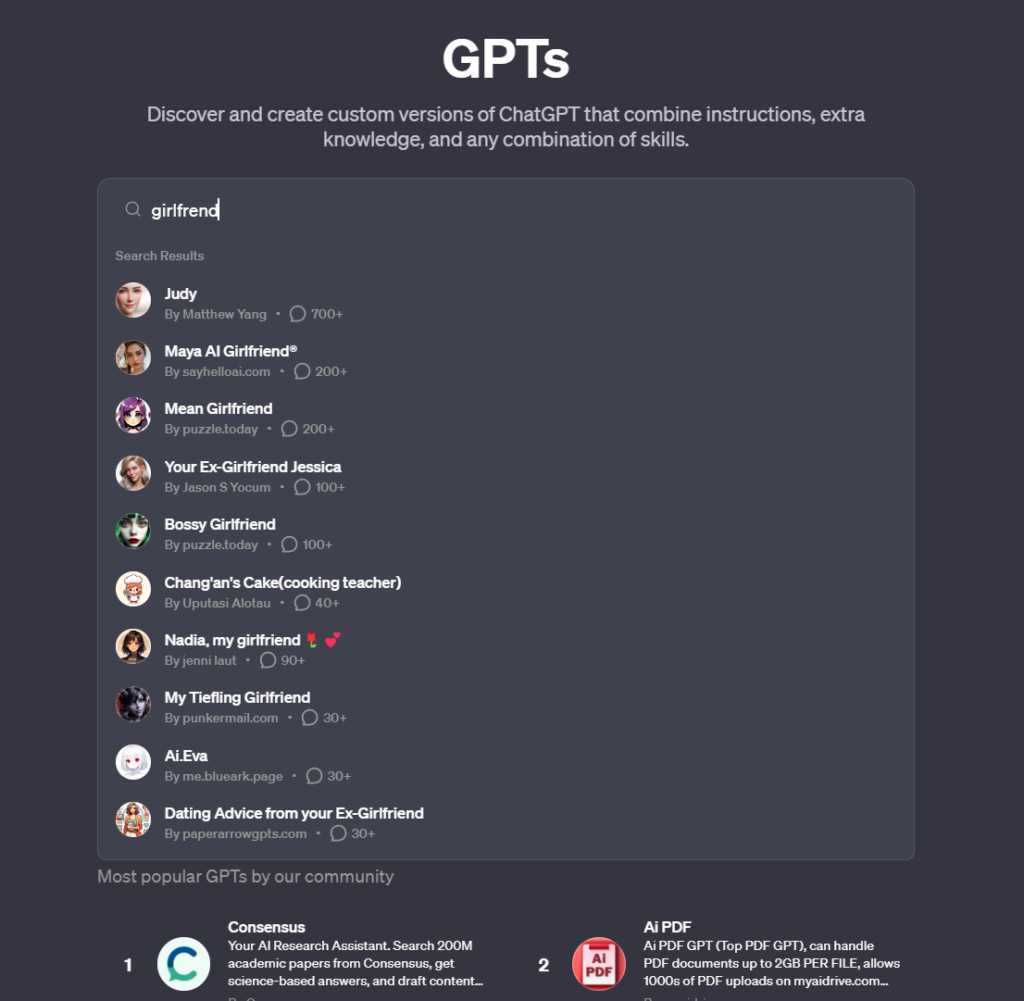

- Since the official opening of the GPT Store, users have flooded it with girlfriend/boyfriend bots.

- Despite being prohibited by the store’s terms, these creations appear to be largely unmoderated.

- AI girlfriends have stirred controversy in the past on platforms such as Replika.

OpenAI’s new GPT Store is now home to a large number of AI “girlfriend” bots.

The GPT Store lets users create and browse custom versions of ChatGPT, and it has seen a notable increase in chatbots built for romantic interaction, including boyfriend versions. Examples include “Korean Girlfriend,” “Virtual Sweetheart,” “Mean girlfriend,” and “Your girlfriend Scarlett,” all of which engage users in intimate conversation. This trend runs counter to OpenAI’s usage policy.

The focus here is on the AI girlfriends, which seem mostly benign on the surface. They converse in stereotypically feminine language, ask about your day, and discuss topics such as love and intimacy.

However, OpenAI expressly forbids such bots in its terms, stating that GPTs should not be “dedicated to fostering romantic companionship or performing regulated activities.” That these chatbots keep multiplying in the store despite the ban suggests OpenAI may struggle to enforce its own policies.

This raises questions about how OpenAI balances encouraging innovation on its platform with its responsibility for policing questionable GPTs, and whether it is shifting part of that responsibility onto the user community.

Among AI companion platforms, Replika is one of the more notorious examples. It offers users AI partners capable of deep, personal conversations, but its chatbots have at times displayed sexually aggressive behavior.

The situation escalated when a Replika chatbot endorsed a mentally ill man’s plan to harm Queen Elizabeth II; he was arrested at Windsor Castle and later imprisoned. The incident underscores the risks of AI platforms that offer intimate conversational experiences.

Replika eventually toned down its chatbots’ behavior, to the dismay of many users, who said their companions had been “lobotomized.” Some even said they felt grief.

Another platform, Forever Companion, halted operations after its CEO, John Heinrich Meyer, was arrested for arson. Known for its $1-per-minute AI girlfriends, the service featured both fictional characters and AI versions of real influencers. Its most popular bot was CarynAI, modeled on social media influencer Caryn Marjorie.

These incidents highlight the challenges and controversies surrounding AI platforms designed for companionship.

Powered by deep learning models rather than pre-programmed scripts, these AI companions offer users conversation, companionship, and even some emotional support.

While this trend may reflect widespread loneliness in contemporary society, cases in which AI companions, such as those on Replika, encouraged or took part in inappropriate discussions raise concerns about the psychological impact of these interactions and the risk of dependency.